Nick Schuch, Operations Lead

Continuous integration platforms are a vital component of any development shop. We rely on ours heavily to keep projects at the quality they deserve. As early adopters of Docker (0.7.6) for our QA and staging platform, we thought it was time to take our CI environment to the next level!

So first a little bit about the platforms.

The original environment was powered by Jenkins, a powerful CI server with many community-contributed plugins. However, we were running 2 environments per project. These were:

This caused a few issues. The main one was that we would occasionally have leftover files and service state, such as Solr still holding an index from an earlier build. This is a huge issue when it comes to ensuring consistent builds. Our other major issue was that this infrastructure ran on a single host, which meant it didn't scale very well (if at all): builds had to come to a stop while we turned off the host and increased its resources.

We went into the development of this new infrastructure with the following goals:

As you can see we had some ambitious goals, and we were able to achieve them!

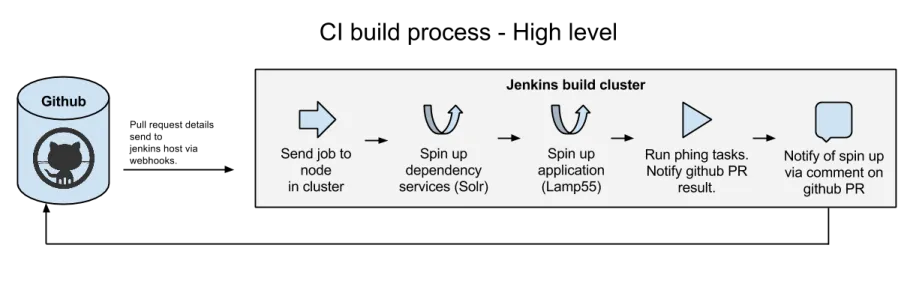

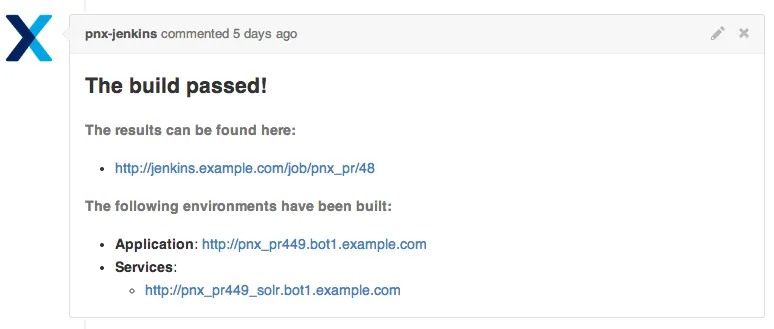

So let's look into this build process a little more. Some key takeaways are:

As you might have already guessed, we are using Docker under the hood, but what about the glue that holds it together? We utilize the following technologies:

    builds:
      pnx_pr:
        human_name: 'PNX: Pull request'
        description: 'Triggered by Github.'
        project: 'pnx'
        github_project: 'previousnext/pnx'
        application: 'previousnext/lamp55'
        steps:
          - 'phing prepare'
          - 'phing test'
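To make the build definition above more concrete, here is a minimal Python sketch of how such a definition could be turned into a single throwaway `docker run` invocation. This is not our actual glue code. It assumes that `application` names the Docker image and that `steps` are shell commands run in order. The `docker_command` helper, the `/tmp/workspace` checkout path and the `/data` mount point are all hypothetical names chosen for illustration.

```python
import shlex

# Build definition mirroring the YAML above, written as a plain dict so the
# sketch needs no YAML parser.
BUILDS = {
    "pnx_pr": {
        "human_name": "PNX: Pull request",
        "description": "Triggered by Github.",
        "project": "pnx",
        "github_project": "previousnext/pnx",
        "application": "previousnext/lamp55",
        "steps": ["phing prepare", "phing test"],
    }
}


def docker_command(build, workspace="/tmp/workspace"):
    """Return the argv for one throwaway container that runs every step.

    Assumptions (not from the original post): the checkout is bind-mounted
    at /data, and steps are chained with && so the first failure stops the
    build.
    """
    script = " && ".join(build["steps"])
    return [
        "docker", "run", "--rm",      # --rm removes the container on exit
        "-v", f"{workspace}:/data",   # hypothetical mount point for the code
        "-w", "/data",                # run the steps from the mounted checkout
        build["application"],         # assumed to be the Docker image name
        "sh", "-c", script,
    ]


if __name__ == "__main__":
    # Print the command rather than running it, so the sketch works
    # without Docker installed.
    print(shlex.join(docker_command(BUILDS["pnx_pr"])))
```

Because the container is removed after every run, nothing from one build (files, indexes, running services) can leak into the next, which is the consistency problem described earlier.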

To get a good start with Jenkins and Docker, check out The Docker Book. It was released very recently and is a great resource for getting started with Docker and learning how to integrate it with Jenkins.

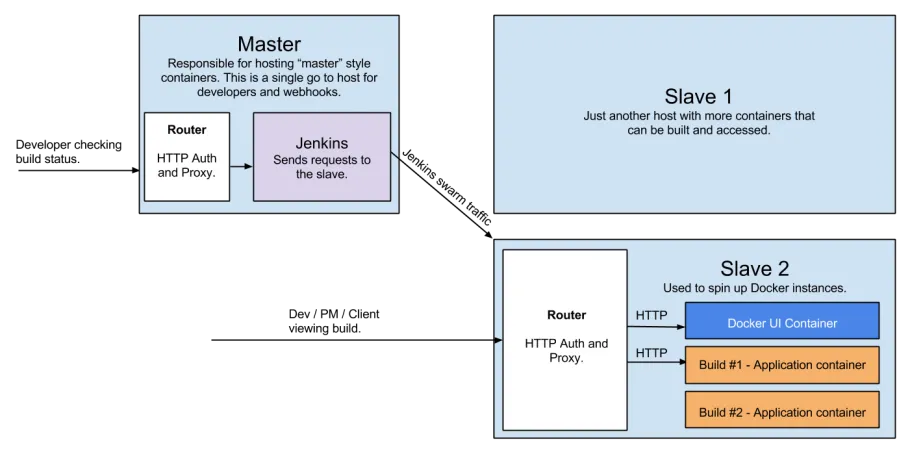

Below is a diagram of a slave used for builds. It depicts how all these technologies work together.

What we have achieved in such a short period of time is making a big difference. We now have more consistent builds (and everything else discussed above), and we also have a CI framework that opens up more than just testing possibilities (to be covered in future blog posts). Until then, if you are looking for a better way to do CI, I am happy to say this is a great option.