Nick Schuch, Operations Lead

Docker is reinventing the way we package and deploy our applications, and it brings new challenges to hosting. In this blog post I will share a recipe for logging your Docker-packaged applications.

Going into this, I had two major goals:

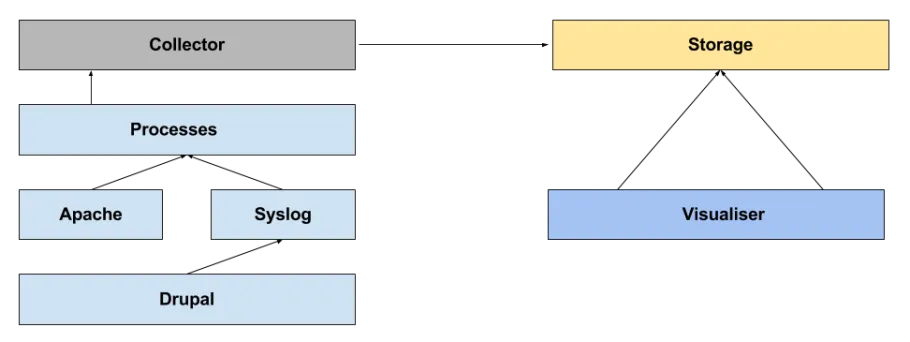

The following are the components which make up a standard logging pipeline.

I'll start with the core piece of the puzzle: storage.

The storage component is in charge of:

Some open source options:

Some services where you can get this right out of the box:

None of these services require Docker container-based hosting; you can use them right now on your existing infrastructure.

However, they become a key component of Docker-based infrastructure because we are constantly rolling out new containers in place of old ones, so logs cannot be left to live inside the containers themselves.
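Because containers are replaced so often, whatever collects logs has to notice containers coming and going. The Docker Engine API exposes a `GET /events` endpoint that streams events such as container starts and exits. The sketch below is a minimal illustration under stated assumptions, not the pipeline described in this post: it assumes the events have already been read off the stream one JSON object per line, with the `Type`, `Action` and `Actor.ID` fields used by recent API versions. The helper names `container_changes` and `update_followed` are hypothetical.

```python
import json
from typing import Iterable, Iterator, Set, Tuple

# Container actions that change which containers a collector should follow.
# Assumption: events use the "Type"/"Action"/"Actor" fields of recent Docker API versions.
WATCHED_ACTIONS = {"start", "die"}


def container_changes(event_lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield (action, container_id) for container start/die events, skipping everything else."""
    for line in event_lines:
        line = line.strip()
        if not line:
            continue  # ignore blank keep-alive lines
        event = json.loads(line)
        if event.get("Type") != "container":
            continue  # network, volume, image events are irrelevant to log collection
        action = event.get("Action")
        if action in WATCHED_ACTIONS:
            yield action, event["Actor"]["ID"]


def update_followed(followed: Set[str], changes: Iterable[Tuple[str, str]]) -> Set[str]:
    """Start following new containers and stop following ones that have exited."""
    for action, container_id in changes:
        if action == "start":
            followed.add(container_id)
        else:
            followed.discard(container_id)
    return followed
```

In a real collector, each `start` would trigger attaching to that container's log stream and each `die` would close it.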

The log collector is an extremely simple service: its job is to collect all the logs and push them to the remote storage service.
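To make the "collect and push" job concrete, here is a minimal sketch of a batching loop. The names `ship_logs` and `send`, the default batch size and the `{"lines": [...]}` payload shape are all hypothetical; a real storage service defines its own ingestion API, and `send` would typically wrap an HTTP POST to it.

```python
import json
from typing import Callable, Iterable, List


def ship_logs(lines: Iterable[str], send: Callable[[bytes], None], batch_size: int = 100) -> int:
    """Group log lines into batches and pass each batch to send() as JSON.

    Returns the number of batches sent. The payload format is a hypothetical example.
    """
    batch: List[str] = []
    sent = 0
    for line in lines:
        batch.append(line.rstrip("\n"))
        if len(batch) >= batch_size:
            send(json.dumps({"lines": batch}).encode("utf-8"))
            sent += 1
            batch = []
    if batch:  # flush the final, partially filled batch
        send(json.dumps({"lines": batch}).encode("utf-8"))
        sent += 1
    return sent
```

Batching keeps the number of requests to the storage service low; the trade-off is that a line may wait until its batch fills, so a production collector would usually also flush on a timer.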

Don't confuse simple with unimportant, though: if the collector stops, logs stop reaching storage. I highly recommend you set up monitoring for this component.
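One simple way to monitor the collector is a staleness check: record when it last pushed logs successfully and alert if that was too long ago. This is a hypothetical sketch; `collector_is_healthy`, the `last_shipped_at` timestamp and the five-minute default are assumptions, not part of any particular monitoring tool.

```python
import time
from typing import Optional


def collector_is_healthy(last_shipped_at: float,
                         max_silence_seconds: float = 300.0,
                         now: Optional[float] = None) -> bool:
    """Return False if the collector has not shipped anything for too long.

    last_shipped_at is a Unix timestamp the collector updates after each successful push.
    """
    if now is None:
        now = time.time()
    return (now - last_shipped_at) <= max_silence_seconds
```

Pick the threshold with care: a quiet environment may legitimately produce no logs for a while, so the silence window should be longer than your normal gaps between log lines.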

In most cases the "storage" component provides an interface for interacting with the logged data.

In addition, we can write applications that consume the "storage" component's API to provide a command-line experience.
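A command-line client of this kind can be very small. The sketch below only turns command-line arguments into a query URL; the `logs` program name, the `/search` endpoint, its query parameters and `logs.example.com` are all hypothetical, so substitute whatever your storage service's API actually offers.

```python
import argparse
from typing import List, Optional
from urllib.parse import urlencode


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="logs", description="Query the central log store (hypothetical API).")
    parser.add_argument("container", help="container or service name to filter on")
    parser.add_argument("--since", default="15m", help="how far back to search")
    parser.add_argument("--limit", type=int, default=100, help="maximum number of lines to return")
    parser.add_argument("--base-url", default="http://logs.example.com", help="storage API base URL")
    return parser


def query_url(args: argparse.Namespace) -> str:
    """Build the search URL for the (hypothetical) storage API."""
    params = urlencode({"container": args.container, "since": args.since, "limit": args.limit})
    return f"{args.base_url.rstrip('/')}/search?{params}"


def main(argv: Optional[List[str]] = None) -> None:
    args = build_parser().parse_args(argv)
    # A real client would fetch this URL (e.g. with urllib.request.urlopen) and print the results.
    print(query_url(args))
```

For example, `main(["web", "--limit", "5"])` prints the URL that would be requested for the last 15 minutes of the `web` service's logs.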

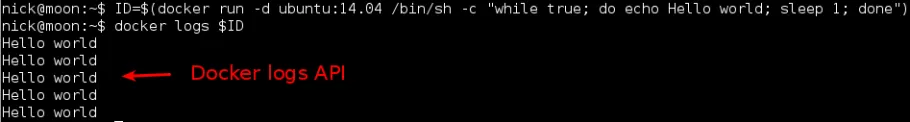

So how do we implement these components in a Docker hosting world? The key to our implementation is the Docker API.

In the example below we have:

This means we can pick up all the logs for a service through the Docker API, but only if the processes inside the container print to STDOUT rather than writing to a log file.
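On the application side, this usually means swapping file-based log handlers for a stream handler on STDOUT. Here is a minimal sketch using Python's standard `logging` module; the function name `configure_stdout_logging` and the format string are illustrative assumptions.

```python
import logging
import sys


def configure_stdout_logging(name: str = "app") -> logging.Logger:
    """Send this application's logs to STDOUT instead of a file, so Docker can capture them."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    # Remove any existing handlers (e.g. a FileHandler) so nothing is written inside the container.
    for existing in list(logger.handlers):
        logger.removeHandler(existing)
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s"))
    logger.addHandler(handler)
    logger.propagate = False  # avoid duplicate lines via the root logger
    return logger
```

`StreamHandler` flushes after every record, so lines reach Docker promptly even though STDOUT is not a terminal inside a container.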

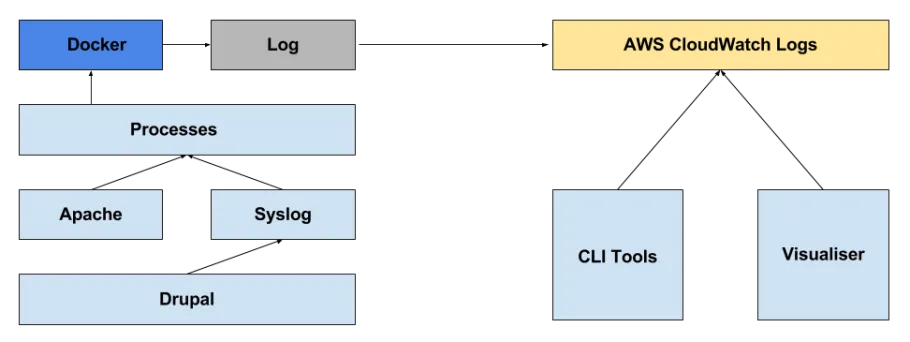
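For a container started without a TTY, the Docker Engine API's `GET /containers/{id}/logs` endpoint (with `stdout=1&stderr=1`) returns STDOUT and STDERR multiplexed into one stream of frames. Each frame has an 8-byte header (one byte for the stream type, where 0 is stdin, 1 is stdout and 2 is stderr, then three zero bytes, then a big-endian 32-bit payload length) followed by the payload. The sketch below parses an already-read buffer; `demux_docker_logs` is a hypothetical name, and a real collector would read the stream incrementally. Containers started with a TTY return raw, unframed output instead.

```python
import struct
from typing import Iterator, Tuple

STREAM_NAMES = {0: "stdin", 1: "stdout", 2: "stderr"}
# Stream type byte, three padding bytes, big-endian unsigned 32-bit payload length.
HEADER = struct.Struct(">BxxxL")


def demux_docker_logs(raw: bytes) -> Iterator[Tuple[str, bytes]]:
    """Split a multiplexed Docker log buffer into (stream_name, payload) pairs."""
    offset = 0
    while offset + HEADER.size <= len(raw):
        stream_type, length = HEADER.unpack_from(raw, offset)
        offset += HEADER.size
        payload = raw[offset:offset + length]
        if len(payload) < length:
            break  # truncated frame: a streaming reader would wait for more bytes
        offset += length
        yield STREAM_NAMES.get(stream_type, "unknown"), payload
```

Keeping the stream name lets the pipeline tag each line as STDOUT or STDERR before it is shipped to storage.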

With this in mind, we developed the following logging pipeline and open-sourced some of its components:

I feel we have achieved a lot with this approach.

Here are some takeaways: